Western Digital SN640 firmware updates R1110021 and R1410004

Western Digital has released the firmware updates R1110021 (SE/ISE variant) and R1410004 (TCG variant) for SN640 NVMe SSDs. As maintenance releases, the updates contain the usual ongoing improvements. They also fix a bug that could cause timeout errors in rare cases.

Problem description

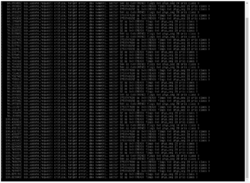

With earlier firmware versions, SSD timeouts can occur in rare cases and can lead to an SSD failure. The following log excerpt from a Proxmox system with Ceph shows such a case:

[...]
Mar 23 23:48:43 node03 kernel: nvme nvme2: I/O 512 QID 23 timeout, aborting
Mar 23 23:48:43 node03 kernel: nvme nvme2: I/O 513 QID 23 timeout, aborting
Mar 23 23:48:43 node03 kernel: nvme nvme2: I/O 514 QID 23 timeout, aborting
Mar 23 23:48:43 node03 kernel: nvme nvme2: I/O 515 QID 23 timeout, aborting
Mar 23 23:48:49 node03 ceph-osd[3189]: 2022-03-23T23:48:49.471+0100 7f9d339b1700 -1 osd.11 6687 heartbeat_check: no reply from 192.168.75.92:6808 osd.10 since back 2022-03-23T23:48:23.068194+0100 front 2022-03-23T23:48:23.068173+0100 (oldest deadline 2022-03-23T23:48:48.967935+0100)
Mar 23 23:48:50 node03 pvestatd[3341]: VM 213 qmp command failed - VM 213 qmp command 'query-proxmox-support' failed - got timeout
Mar 23 23:48:51 node03 pvestatd[3341]: status update time (6.489 seconds)
Mar 23 23:48:55 node03 pmxcfs[2768]: [dcdb] notice: data verification successful
Mar 23 23:49:14 node03 kernel: nvme nvme2: I/O 512 QID 23 timeout, reset controller
Mar 23 23:49:45 node03 kernel: nvme nvme2: I/O 0 QID 0 timeout, reset controller
Mar 23 23:50:25 node03 kernel: nvme nvme2: Device not ready; aborting reset, CSTS=0x1
Mar 23 23:50:25 node03 kernel: nvme nvme2: Abort status: 0x371
Mar 23 23:50:25 node03 kernel: nvme nvme2: Abort status: 0x371
Mar 23 23:50:25 node03 kernel: nvme nvme2: Abort status: 0x371
Mar 23 23:50:25 node03 kernel: nvme nvme2: Abort status: 0x371
Mar 23 23:50:35 node03 kernel: nvme nvme2: Device not ready; aborting reset, CSTS=0x1
Mar 23 23:50:35 node03 kernel: nvme nvme2: Removing after probe failure status: -19
Mar 23 23:50:46 node03 ceph-osd[3176]: 2022-03-23T23:50:46.250+0100 7f6ac4847700 -1 bdev(0x56336572e400 /var/lib/ceph/osd/ceph-10/block) _aio_thread got r=-5 ((5) Input/output error)
Mar 23 23:50:46 node03 ceph-osd[3176]: 2022-03-23T23:50:46.250+0100 7f6abd029700 -1 bdev(0x56336572ec00 /var/lib/ceph/osd/ceph-10/block) _sync_write sync_file_range error: (5) Input/output error
Mar 23 23:50:46 node03 ceph-osd[3176]: 2022-03-23T23:50:46.250+0100 7f6ac3044700 -1 bdev(0x56336572ec00 /var/lib/ceph/osd/ceph-10/block) _aio_thread got r=-5 ((5) Input/output error)
Mar 23 23:50:46 node03 ceph-osd[3176]: 2022-03-23T23:50:46.250+0100 7f6ac684b700 -1 osd.10 6687 get_health_metrics reporting 153 slow ops, oldest is osd_op(client.23432791.0:11226906 4.4c 4:3305dcbc:::rbd_data.fa14dd8c319a13.0000000000003910:head [sparse-read 0~4194304] snapc 0=[] ondisk+read+known_if_redirected e6687)
Mar 23 23:50:46 node03 kernel: nvme nvme2: Device not ready; aborting reset, CSTS=0x1
Mar 23 23:50:46 node03 kernel: blk_update_request: I/O error, dev nvme2n1, sector 1308340248 op 0x1:(WRITE) flags 0x8800 phys_seg 32 prio class 0
Mar 23 23:50:46 node03 kernel: blk_update_request: I/O error, dev nvme2n1, sector 1852155432 op 0x0:(READ) flags 0x0 phys_seg 32 prio class 0
Mar 23 23:50:46 node03 kernel: blk_update_request: I/O error, dev nvme2n1, sector 2743651928 op 0x0:(READ) flags 0x0 phys_seg 32 prio class 0
Mar 23 23:50:46 node03 kernel: blk_update_request: I/O error, dev nvme2n1, sector 1029435456 op 0x0:(READ) flags 0x0 phys_seg 1 prio class 0
Mar 23 23:50:46 node03 kernel: nvme2n1: detected capacity change from 3750748848 to 0
Mar 23 23:50:46 node03 kernel: blk_update_request: I/O error, dev nvme2n1, sector 1446553816 op 0x1:(WRITE) flags 0x800 phys_seg 2 prio class 0
Mar 23 23:50:46 node03 kernel: blk_update_request: I/O error, dev nvme2n1, sector 690010880 op 0x0:(READ) flags 0x0 phys_seg 1 prio class 0
Mar 23 23:50:46 node03 kernel: blk_update_request: I/O error, dev nvme2n1, sector 1424451176 op 0x1:(WRITE) flags 0x8800 phys_seg 1 prio class 0
Mar 23 23:50:46 node03 kernel: blk_update_request: I/O error, dev nvme2n1, sector 3461494728 op 0x1:(WRITE) flags 0x8800 phys_seg 3 prio class 0
Mar 23 23:50:46 node03 kernel: blk_update_request: I/O error, dev nvme2n1, sector 1424458824 op 0x1:(WRITE) flags 0x8800 phys_seg 1 prio class 0
Mar 23 23:50:46 node03 kernel: blk_update_request: I/O error, dev nvme2n1, sector 1424458984 op 0x1:(WRITE) flags 0x8800 phys_seg 1 prio class 0
Mar 23 23:50:46 node03 kernel: Buffer I/O error on dev dm-1, logical block 180818971, lost async page write
Mar 23 23:50:46 node03 kernel: Buffer I/O error on dev dm-1, logical block 180818972, lost async page write
[...]
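To check whether a system has already logged timeouts of this kind, the kernel log can be searched for the message pattern shown in the excerpt. The following is a minimal sketch, not part of the original article: the function name `nvme_timeout_controllers` is made up for illustration, and it assumes the kernel messages have the same wording as in the log above.

```shell
#!/usr/bin/env bash
# Sketch: list NVMe controllers that logged I/O timeouts.
# Reads kernel log text on stdin and prints each affected controller once.
# The function name is hypothetical; the pattern is taken from the log excerpt.
nvme_timeout_controllers() {
    grep -oE 'nvme nvme[0-9]+: I/O [0-9]+ QID [0-9]+ timeout' \
        | awk '{ print $2 }' \
        | tr -d ':' \
        | sort -u
}

# Usage on a live system (assumes journalctl is available):
#   journalctl -k --no-pager | nvme_timeout_controllers
```

An empty result only means that no matching message is in the log that was searched. It does not show that the installed firmware is unaffected.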

The following errors may occur during a restart attempt:

Solution

An affected SSD can be recovered by formatting it (see the section Recover SSD by formatting). The data on the SSD is lost in the process and has to be restored, for example from a backup or by a rebuild.

Afterwards, the firmware has to be updated so that the problem does not occur again. The following firmware versions fix the problem. We recommend updating all SN640 SSDs that run older firmware versions:

| Type | Firmware version | Published | Download |
|---|---|---|---|
| Western Digital SN640 TCG | R1410004 | 02/2022 | here |
| Western Digital SN640 SE | R1110021 | 04/2021 | |
| Western Digital SN640 ISE | R1110021 | 04/2021 | here |

Update firmware

Linux

Under Linux, the tool nvme-cli can be used to update the firmware. The tool can be installed under Debian and Ubuntu via the package manager. The installation instructions for other supported distributions can be found on GitHub. The new firmware can be installed with the following commands:

apt-get install nvme-cli
nvme fw-download /dev/nvmeXY --fw=FW.vpkg
nvme fw-commit /dev/nvmeXY -a 1

The new firmware is only active after a reboot of the server.
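The two update commands can be wrapped so that the commit only runs if the download succeeded. This is a minimal sketch under stated assumptions: the function name `update_nvme_firmware` is hypothetical, and the device and image path are passed in by the caller. Only the `nvme fw-download` and `nvme fw-commit` calls shown above are used.

```shell
#!/usr/bin/env bash
# Sketch: download a firmware image to an NVMe controller and commit it.
# $1: controller device (e.g. /dev/nvme0), $2: path to the firmware image.
# The function name is hypothetical; the nvme calls match the commands above.
update_nvme_firmware() {
    local dev="$1" image="$2"
    if [ ! -r "$image" ]; then
        echo "firmware image not readable: $image" >&2
        return 1
    fi
    # Stop here if the download fails, so that nothing is committed.
    nvme fw-download "$dev" --fw="$image" || return 1
    nvme fw-commit "$dev" -a 1
}

# Usage:
#   update_nvme_firmware /dev/nvme0 DCSN640_GR_R1410004.vpkg
```

The sketch does not check whether the image matches the SSD variant (SE/ISE or TCG). That has to be verified before calling it.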

Example under Proxmox

root@pve:~# apt-get install nvme-cli
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following NEW packages will be installed:
  nvme-cli
0 upgraded, 1 newly installed, 0 to remove and 86 not upgraded.
Need to get 0 B/247 kB of archives.
After this operation, 570 kB of additional disk space will be used.
Selecting previously unselected package nvme-cli.
(Reading database ... 46034 files and directories currently installed.)
Preparing to unpack .../nvme-cli_1.7-1_amd64.deb ...
Unpacking nvme-cli (1.7-1) ...
Setting up nvme-cli (1.7-1) ...
Processing triggers for man-db (2.8.5-2) ...
root@pve:~# nvme fw-download /dev/nvme0 --fw=DCSN640_GR_R1410004.vpkg
Firmware download success
root@pve:~# nvme fw-commit /dev/nvme0 -a 1
Success committing firmware action:1 slot:0

Check firmware

After rebooting the server, "nvme list" can be used to check whether the new firmware is active.

root@jrag-pve-node02:~# nvme list
Node             SN                   Model                                    Namespace Usage                      Format           FW Rev
---------------- -------------------- ---------------------------------------- --------- -------------------------- ---------------- --------
/dev/nvme0n1     A06BFXYZ             WUS4BB019D7P3E4                          1         1.92 TB / 1.92 TB          512 B + 0 B      R1410004
/dev/nvme1n1     A06BFXYZ             WUS4BB019D7P3E4                          1         1.92 TB / 1.92 TB          512 B + 0 B      R1410004
/dev/nvme2n1     A06F1XYZ             WUS4BB019D7P3E4                          1         1.92 TB / 1.92 TB          512 B + 0 B      R1410004
/dev/nvme3n1     A06F1XYZ             WUS4BB019D7P3E4                          1         1.92 TB / 1.92 TB          512 B + 0 B      R1410004
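On systems with many SSDs, the same check can be scripted. The sketch below is an assumption-laden alternative to reading the `nvme list` table: it reads the `firmware_rev` attribute that the Linux kernel exposes under `/sys/class/nvme/`, and the function name `report_fw_rev` is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: report whether each NVMe controller runs the expected firmware.
# $1: expected revision (e.g. R1410004)
# $2: sysfs directory to scan (defaults to /sys/class/nvme)
# The function name is hypothetical.
report_fw_rev() {
    local expected="$1" root="${2:-/sys/class/nvme}" ctrl rev
    for ctrl in "$root"/nvme*; do
        # Skip entries without a readable firmware_rev attribute.
        [ -r "$ctrl/firmware_rev" ] || continue
        rev=$(tr -d '[:space:]' < "$ctrl/firmware_rev")
        if [ "$rev" = "$expected" ]; then
            echo "${ctrl##*/} $rev ok"
        else
            echo "${ctrl##*/} $rev MISMATCH"
        fi
    done
}

# Usage:
#   report_fw_rev R1410004
```

The sketch compares every NVMe controller against a single revision. In a system that mixes SN640 variants or other SSD models, a mismatch therefore does not necessarily indicate a failed update.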

Windows

Section follows.

Linux script

A bash script that updates the firmware of all SN640 NVMe SSDs in a system is available in the Thomas-Krenn.AG GitHub repository SN640-FW-Update. Based on the installed firmware revision, the script recognizes which variant each SSD is and whether it runs an older firmware.

root@pve:~# ./FW-Update_SN640.sh
Install needed programs (unzip, nvme-cli).
nvme-cli is already the newest version (1.7-1).
0 upgraded, 0 newly installed, 0 to remove and 86 not upgraded.
Download firmware files
Download completed
########################################
#Start update process - 5 NVMe found#
########################################
------------------------------------------------------------------------------
Firmware or NVMe is not known. Installed FW: 0105
Remaining NVMe: 4
------------------------------------------------------------------------------
Firmware of /dev/nvme3n1 is current: R1410004
Remaining NVMe: 3
------------------------------------------------------------------------------
Firmware needs to be updated for /dev/nvme2n1. Installed FW: R1410002
Success activating firmware action:1 slot:0, but firmware requires conventional reset
Update to /dev/nvme2n1 completed
Remaining NVMe: 2
[...]
####################################################################
#Updates completed. Changes will only be active after reboot#
####################################################################
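The detection by firmware revision could look roughly like the following sketch. It is not the code from the repository. It assumes that revisions starting with `R111` belong to the SE/ISE variant and revisions starting with `R141` to the TCG variant, which is inferred only from the two release names and the `R1410002` example above. The function name `classify_sn640_fw` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: decide what to do with an SSD based on its firmware revision.
# Assumption (not confirmed by the source): R111* = SE/ISE, R141* = TCG.
# Prints "current", "update <target>", "newer" or "unknown".
classify_sn640_fw() {
    local rev="$1" target oldest
    case "$rev" in
        R111*) target="R1110021" ;;   # SE/ISE release named in this article
        R141*) target="R1410004" ;;   # TCG release named in this article
        *) echo "unknown"; return 0 ;;
    esac
    # Compare the two revisions as plain strings in the C locale.
    oldest=$(printf '%s\n' "$rev" "$target" | LC_ALL=C sort | head -n 1)
    if [ "$rev" = "$target" ]; then
        echo "current"
    elif [ "$oldest" = "$rev" ]; then
        echo "update $target"
    else
        echo "newer"
    fi
}

# Usage:
#   classify_sn640_fw R1410002
```

Revisions that match neither prefix, such as `0105` in the output above, are reported as unknown and left untouched. Revisions that sort after the target are reported as newer, so the sketch never suggests a downgrade.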

Recover SSD by formatting

If the described "Format Corrupt" has occurred with an NVMe, it can be made ready for use again by formatting the namespace.

| Note: Formatting the namespace does not restore any data. The data must be restored from a backup, by a rebuild, etc. |
|---|

nvme format /dev/nvmeXY

Example

root@pve:~# nvme format /dev/nvme0
Success formatting namespace:ffffffff

If the formatting is successful, no further I/O errors appear in the dmesg log after the "rescanning namespaces" message. The NVMe can then be used again and reintegrated into the system.

[...]
[ 110.099335] blk_update_request: I/O error, dev nvme0n1, sector 0 op 0x0:(READ) flags 0x0 phys_seg 10 prio class 0
[ 119.176576] blk_update_request: I/O error, dev nvme0n1, sector 0 op 0x0:(READ) flags 0x0 phys_seg 32 prio class 0
[ 119.223403] blk_update_request: I/O error, dev nvme0n1, sector 0 op 0x0:(READ) flags 0x0 phys_seg 17 prio class 0
[ 128.716407] nvme nvme0: rescanning namespaces.
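The check described above can also be scripted. The sketch below counts the I/O errors that were logged for a controller's namespaces after its most recent "rescanning namespaces" message. The function name `io_errors_after_rescan` is hypothetical, and the sketch assumes the message wording of the dmesg excerpt above.

```shell
#!/usr/bin/env bash
# Sketch: count I/O errors logged after the last namespace rescan.
# $1: controller name (e.g. nvme0); dmesg text is read from stdin.
# Prints the error count; exits with status 2 if no rescan message was found.
# The function name is hypothetical.
io_errors_after_rescan() {
    local ctrl="$1"
    awk -v ctrl="$ctrl" '
        # A rescan of this controller resets the counter.
        index($0, "nvme " ctrl ": rescanning namespaces") { count = 0; seen = 1; next }
        # Count I/O errors on any namespace of this controller.
        index($0, "I/O error, dev " ctrl "n") { count++ }
        END { if (!seen) exit 2; print count + 0 }
    '
}

# Usage:
#   dmesg | io_errors_after_rescan nvme0
```

A result of 0 corresponds to the successful case in the excerpt, where the errors stop once the namespaces have been rescanned.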

Author: Florian Sebald